Signal-based marketing is only as good as the data that powers it. If your tracking is inconsistent, your CRM is cluttered, or identity fields are unreliable, even the best intent platforms or trigger workflows will produce noise instead of value. This article outlines the essential tracking, identity resolution, and data hygiene practices that ensure your signals fire when they should and mean something when they do. Forget short-term fixes like more tools or enrichment layers. Instead, start by building a data model that supports reliable automation.

Why Most Signal-Based Plays Fail Without Strong Data Foundations

When poor tracking causes false signals or misfires

Signals are only useful if they reflect real user behaviour. A missed form fill, a duplicated lead source, or an untagged ad click can throw off your entire automation flow. Signal-based systems often fail not because the strategy is flawed, but because the underlying tracking is incomplete or inconsistent.

According to Snowplow's guide on event tracking design, one poorly implemented event can cause major misinterpretation in downstream analysis. That’s a problem when your automation depends on a single button click or key page view.

Trust in automation breaks without data clarity

RevOps teams rely on signals to route leads, score intent, and trigger outbound. But if marketers can’t explain how a lead scored “hot” or why a sales rep was notified, trust erodes. Automation without transparency leads to confusion and eventual disengagement from sales.

Worse, it creates internal finger-pointing. The signal says the lead is interested, but sales says the account has gone cold. Often, both are right. The issue lies in the data logic behind the trigger.

Symptoms of a weak signal data setup

If you’re experiencing any of the following, it’s time to audit your setup:

High volumes of leads triggered by minor or irrelevant behaviours

Sales ignoring leads because they “don’t trust the scoring”

Duplicate or unassigned records in CRM

Alerts firing with incomplete context

Enrichment tools returning inconsistent or conflicting data

Before scaling signal-based automation, these cracks need to be addressed. Many of these issues occur because the data model was built to capture every possible event rather than the strongest buyer intent signals.

The Minimum Data Model Required to Support Signals

Core events to track (form fills, product views, key page visits)

At a baseline, signal-based systems need to monitor specific behavioural events. These include:

Demo or contact form submissions

Visits to pricing, case study, or integration pages

Product usage milestones (e.g. trial activation)

Ad engagements (e.g. LinkedIn lead gen form opens)

Each event should be clearly named, deduplicated, and stored in a central warehouse. Segment recommends defining a shared schema to keep tracking consistent across marketing, product, and sales tools.
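Here is a minimal Python sketch of the naming and deduplication rules above. The `object_action` snake_case convention, the `Event` fields, and the assumption that each event arrives with a tracker-assigned `event_id` are all hypothetical. Adapt them to your own tracking plan.

```python
import re
from dataclasses import dataclass

# Hypothetical naming convention: lowercase snake_case "object_action",
# e.g. "demo_form_submitted" or "pricing_page_viewed".
EVENT_NAME_PATTERN = re.compile(r"^[a-z]+(_[a-z]+)+$")


@dataclass(frozen=True)
class Event:
    event_id: str   # unique ID assumed to be assigned by the tracker
    name: str
    user_id: str
    timestamp: str  # ISO 8601 string


def validate_and_dedupe(events):
    """Split events into (clean, rejected), dropping repeat deliveries."""
    seen_ids = set()
    clean, rejected = [], []
    for event in events:
        if not EVENT_NAME_PATTERN.match(event.name):
            rejected.append(event)  # name breaks the convention
            continue
        if event.event_id in seen_ids:
            continue  # same event delivered twice (e.g. a retry)
        seen_ids.add(event.event_id)
        clean.append(event)
    return clean, rejected


events = [
    Event("e1", "demo_form_submitted", "u1", "2024-11-15T10:00:00Z"),
    Event("e1", "demo_form_submitted", "u1", "2024-11-15T10:00:00Z"),
    Event("e2", "Pricing Page View", "u1", "2024-11-15T10:05:00Z"),
]
clean, rejected = validate_and_dedupe(events)
print(len(clean), len(rejected))  # 1 1
```

Log rejected events rather than silently dropping them. That way, naming drift shows up in a report instead of in a misfiring workflow.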

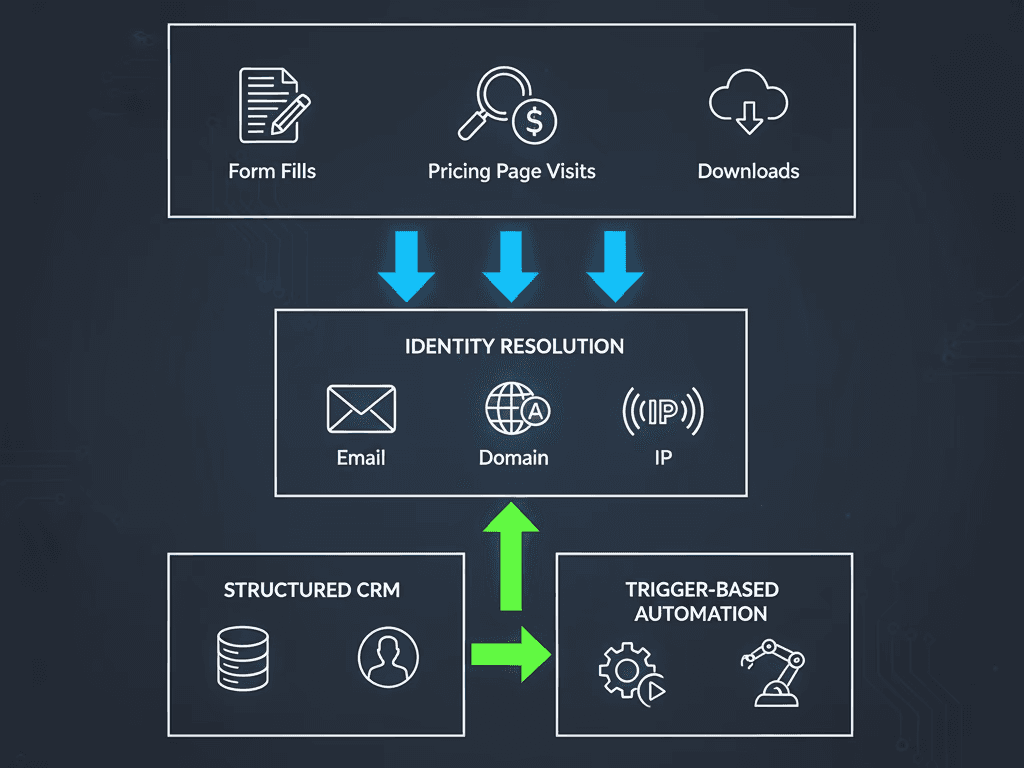

Essential identity resolution fields (email, domain, IP)

Behavioural signals are only valuable if you know who triggered them. That’s where identity resolution comes in. Key fields to collect and standardise include:

Business email address (not just personal)

Company domain

IP address (for company-level intent)

Device ID or cookie for anonymous session linking

Platforms like Clearbit and 6sense provide best-practice models for resolving identity across anonymous and known states.
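To make the anonymous-to-known link concrete, the Python sketch below stitches a cookie ID to an email once a form is submitted. Earlier anonymous page views then become attributable to a known contact. The class, method names, and free-email list are hypothetical. This is not how Clearbit or 6sense work internally. IP-to-company lookup is left out because it depends on a vendor's reverse-IP data.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical, deliberately short list of free email providers.
FREE_EMAIL_DOMAINS = {"gmail.com", "yahoo.com", "hotmail.com", "outlook.com"}


class IdentityGraph:
    """In-memory sketch that stitches anonymous cookie IDs to known emails."""

    def __init__(self) -> None:
        self.anon_to_email: Dict[str, str] = {}
        self.events: Dict[str, List[str]] = {}

    def track(self, anonymous_id: str, event_name: str) -> None:
        # Events are recorded against the cookie ID before we know who it is.
        self.events.setdefault(anonymous_id, []).append(event_name)

    def identify(self, anonymous_id: str, email: str) -> None:
        # Called on a form fill or login: link the cookie to an email.
        self.anon_to_email[anonymous_id] = email.strip().lower()

    def resolve(self, anonymous_id: str) -> Tuple[Optional[str], Optional[str]]:
        """Return (email, company_domain); the domain is None for free email."""
        email = self.anon_to_email.get(anonymous_id)
        if email is None:
            return None, None
        domain = email.rsplit("@", 1)[-1]
        return email, (None if domain in FREE_EMAIL_DOMAINS else domain)

    def events_for(self, email: str) -> List[str]:
        """All events from every cookie ID stitched to this email."""
        email = email.strip().lower()
        return [
            event
            for anon_id, linked in self.anon_to_email.items()
            if linked == email
            for event in self.events.get(anon_id, [])
        ]


graph = IdentityGraph()
graph.track("anon-123", "pricing_page_viewed")      # anonymous visit
graph.identify("anon-123", " Jane.Doe@Acme.com ")   # later form fill
print(graph.resolve("anon-123"))              # ('jane.doe@acme.com', 'acme.com')
print(graph.events_for("jane.doe@acme.com"))  # ['pricing_page_viewed']
```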

Lead-to-account matching and enrichment basics

Signal-based plays are often account-level, even if the trigger is contact-level. That means every lead must be matched to an account, either via domain logic or enrichment tools. Basic enrichment fields should include:

Industry

Company size

Revenue bracket

Tech stack

Job title seniority

Without this, scoring and routing are unreliable. And without reliable routing, signal-based automation has nowhere to go.
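The Python sketch below shows a simple version of domain-based lead-to-account matching. The `Account` fields mirror the enrichment list above. Job title seniority lives on the contact record, so it is omitted. The function names and sample values are hypothetical. Exact-domain matching misses subsidiaries, regional domains such as `acme.co.uk`, and leads on free email domains, which is where enrichment tools come in.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Account:
    domain: str
    industry: str
    company_size: int
    revenue_bracket: str
    tech_stack: List[str] = field(default_factory=list)


def normalise_domain(value: str) -> str:
    """Reduce an email address or URL to a bare domain such as 'acme.com'."""
    value = value.strip().lower()
    if "@" in value:
        value = value.rsplit("@", 1)[1]
    for prefix in ("https://", "http://"):
        if value.startswith(prefix):
            value = value[len(prefix):]
    value = value.split("/", 1)[0]
    if value.startswith("www."):
        value = value[len("www."):]
    return value


def match_lead_to_account(
    lead_email: str, accounts: Dict[str, Account]
) -> Optional[Account]:
    """Look up an account keyed by normalised domain; None means unmatched."""
    return accounts.get(normalise_domain(lead_email))


accounts = {
    "acme.com": Account("acme.com", "Software", 250, "10-50M", ["Salesforce"]),
}
print(normalise_domain("https://www.Acme.com/pricing"))           # acme.com
print(match_lead_to_account("jane@acme.com", accounts).industry)  # Software
print(match_lead_to_account("bob@other.io", accounts))            # None
```

Send unmatched leads (the `None` case) to a review queue rather than dropping them, since lead-to-account match rate is one of the metrics that shows whether the data model is stable.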

Tracking Setup Must-Haves

Clean UTM tagging and naming conventions

Every inbound source should be consistently tracked with UTMs. This is especially important for intent triggers like ad clicks or social shares. Inconsistent UTM values lead to attribution issues and make signals harder to trust.

Define a standard format for:

utm_source (e.g. linkedin, google)

utm_medium (e.g. cpc, email)

utm_campaign (e.g. q4-demand-gen)

utm_content (e.g. demo-form-button)

This allows you to analyse signal quality by channel and campaign. Well-structured UTMs help ensure social behaviour is captured as cleanly as web and email activity.
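One way to enforce the standard format is to validate links before a campaign goes live. The Python sketch below checks a URL's query string against an agreed list. The allowed values are assumptions based on the examples above, as is the lowercase-hyphen slug rule for `utm_campaign` and `utm_content`. Neither is an industry standard.

```python
import re
from urllib.parse import parse_qs, urlparse

# Hypothetical agreed values; replace with your own taxonomy.
ALLOWED_VALUES = {
    "utm_source": {"linkedin", "google", "meta", "newsletter"},
    "utm_medium": {"cpc", "email", "social", "organic"},
}
REQUIRED = ("utm_source", "utm_medium", "utm_campaign")
# Free-text parameters must be lowercase, hyphen-separated slugs.
SLUG = re.compile(r"^[a-z0-9]+(-[a-z0-9]+)*$")


def check_utms(url: str) -> list:
    """Return a list of problems with the UTM parameters on a URL."""
    params = {k: v[0] for k, v in parse_qs(urlparse(url).query).items()}
    problems = [f"missing {key}" for key in REQUIRED if key not in params]
    for key, allowed in ALLOWED_VALUES.items():
        if key in params and params[key] not in allowed:
            problems.append(f"{key}={params[key]!r} not in agreed list")
    for key in ("utm_campaign", "utm_content"):
        if key in params and not SLUG.match(params[key]):
            problems.append(f"{key}={params[key]!r} is not a lowercase slug")
    return problems


good = ("https://example.com/demo?utm_source=linkedin&utm_medium=cpc"
        "&utm_campaign=q4-demand-gen&utm_content=demo-form-button")
bad = "https://example.com/demo?utm_source=LinkedIn&utm_medium=cpc&utm_campaign=Q4_DemandGen"
print(check_utms(good))  # []
print(check_utms(bad))
```

Flagging bad values, rather than silently lowercasing them, sends the fix back to whoever built the link. This keeps the taxonomy from drifting.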

Event tagging across website, product, and ads

Event tracking shouldn’t stop at the website. Product usage events (like feature adoption or logins) are often the best predictors of intent. Likewise, ad platform signals, especially from LinkedIn or Meta, can be enriched if they are tracked with the right identifiers.

Use tools like RudderStack or Segment to unify these events and push them into your CDP, CRM, and analytics layer.
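Tools like RudderStack and Segment handle this mapping for you. The underlying idea is still easy to see in a hand-rolled Python sketch, where each source gets an adapter that maps its own payload onto one shared schema. The payload field names below are hypothetical and do not reflect either vendor's actual event format.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class UnifiedEvent:
    source: str                 # "web", "product" or "ads"
    name: str                   # shared name from the tracking plan
    email: Optional[str]
    anonymous_id: Optional[str]
    timestamp: str              # ISO 8601, UTC, same format everywhere


# Hypothetical raw payload shapes for each source.
ADAPTERS: Dict[str, Callable[[dict], UnifiedEvent]] = {
    "web": lambda r: UnifiedEvent("web", r["event"], r.get("email"),
                                  r.get("anonymousId"), r["ts"]),
    "product": lambda r: UnifiedEvent("product", r["action"], r["user_email"],
                                      None, r["occurred_at"]),
    "ads": lambda r: UnifiedEvent("ads", r["type"], r.get("email"),
                                  None, r["time"]),
}


def unify(batches: Dict[str, List[dict]]) -> List[UnifiedEvent]:
    """Map every raw payload onto the shared schema, ordered by time."""
    events = [ADAPTERS[src](raw) for src, raws in batches.items() for raw in raws]
    # Lexical sort works only because all timestamps share one ISO format.
    return sorted(events, key=lambda e: e.timestamp)


timeline = unify({
    "web": [{"event": "pricing_page_viewed", "anonymousId": "a1",
             "ts": "2024-11-15T09:00:00Z"}],
    "product": [{"action": "trial_activated", "user_email": "jane@acme.com",
                 "occurred_at": "2024-11-15T11:00:00Z"}],
    "ads": [{"type": "lead_form_opened", "email": "jane@acme.com",
             "time": "2024-11-15T10:00:00Z"}],
})
print([e.source for e in timeline])  # ['web', 'ads', 'product']
```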

CRM field structure that supports signal ingestion

Once a signal is triggered, your CRM needs to handle it. That means:

Dedicated fields for intent source and timestamp

Signal history logs (not overwriting previous triggers)

Mappings from signals to lead status changes or scoring

Clear visibility for sales teams (e.g. “Signal: Viewed pricing page on 15 Nov”)

Salesforce and HubSpot both offer templates for signal capture fields that work well across B2B teams. This ensures you build signal-based automation on something solid.
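The field requirements above can be modelled in a few lines. This Python sketch keeps an append-only signal history and maps each signal to a score change and a status transition. It also returns a note in the “Signal: Viewed pricing page on 15 Nov” style. The signal names, points, and MQL threshold are hypothetical, and this is not a Salesforce or HubSpot object model.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Dict, List

# Hypothetical mapping from signal to a sales-readable label and score change.
SIGNAL_RULES = {
    "pricing_page_viewed": ("Viewed pricing page", 10),
    "demo_form_submitted": ("Submitted demo form", 40),
}
MQL_THRESHOLD = 50  # hypothetical score at which status changes


@dataclass
class LeadRecord:
    email: str
    score: int = 0
    status: str = "new"
    signal_history: List[Dict] = field(default_factory=list)

    def add_signal(self, signal: str, source: str, when: datetime) -> str:
        """Log a signal, update score and status, return a note for sales."""
        label, points = SIGNAL_RULES[signal]
        # Append, never overwrite, so earlier triggers stay visible.
        self.signal_history.append(
            {"signal": signal, "source": source, "timestamp": when.isoformat()}
        )
        self.score += points
        if self.status == "new" and self.score >= MQL_THRESHOLD:
            self.status = "mql"
        return f"Signal: {label} on {when.day} {when:%b}"


lead = LeadRecord("jane@acme.com")
print(lead.add_signal("pricing_page_viewed", "website", datetime(2024, 11, 15)))
# Signal: Viewed pricing page on 15 Nov
lead.add_signal("demo_form_submitted", "website", datetime(2024, 11, 18))
print(lead.score, lead.status, len(lead.signal_history))  # 50 mql 2
```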

Data Hygiene and Normalisation Requirements

How bad form data ruins downstream automation

If your forms are letting through junk data, like job titles in all caps, free email domains, or bot traffic, then every automation flow built on that data is compromised. One fake lead with a high score can erode trust quickly.

Tools like Clearbit Forms or reCAPTCHA v3 can help clean inputs at the source.
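Alongside a vendor tool, a few server-side checks can catch some of the junk described above. The Python sketch below does three things. It flags a filled honeypot field (a hidden input that humans never see), flags malformed or free-domain emails, and tidies all-caps job titles. The field names and the free-domain list are hypothetical. The sketch does not call reCAPTCHA, whose score would be verified separately on your server.

```python
import re

# Hypothetical, deliberately short list of free email providers.
FREE_EMAIL_DOMAINS = {"gmail.com", "yahoo.com", "hotmail.com", "outlook.com"}
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[a-z]{2,}$", re.IGNORECASE)


def screen_submission(form: dict):
    """Return (cleaned_form, flags); an empty flags list means it looks clean."""
    flags = []
    # Honeypot: hidden from humans, but bots often fill every field.
    if form.get("website_hp"):
        flags.append("honeypot filled")
    email = form.get("email", "").strip().lower()
    if not EMAIL_RE.match(email):
        flags.append("invalid email")
    elif email.rsplit("@", 1)[1] in FREE_EMAIL_DOMAINS:
        flags.append("free email domain")
    title = form.get("job_title", "").strip()
    if title.isupper():
        title = title.title()  # "MARKETING DIRECTOR" -> "Marketing Director"
    return {**form, "email": email, "job_title": title}, flags


cleaned, flags = screen_submission(
    {"email": " Jane@Acme.com ", "job_title": "MARKETING DIRECTOR"}
)
print(cleaned["job_title"], flags)  # Marketing Director []
```

`str.title()` turns acronyms like “VP” into “Vp”, so a production version would need an exceptions list.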

Standardising field formats (job titles, industry, country)

Normalising your inputs avoids fragmentation across tools. Examples:

“Marketing Director” vs “Director of Marketing” → standardise to role level + function

“UK” vs “United Kingdom” vs “Great Britain” → set to ISO 3166 country codes

“FinTech” vs “Financial Technology” → use agreed sector naming

Your CRM should apply field validation and dropdowns wherever possible to reduce variation. You’ll also want to ensure you have the extra fields and identifiers needed for social intent.
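The normalisation rules above can be sketched as lookup tables plus a keyword match for job titles. The tables, keywords, and the choice of “Financial Technology” as the agreed sector name are hypothetical. `GB` is the ISO 3166-1 alpha-2 code covering the United Kingdom. Unknown values return `None` (or “Unknown”) so they can be routed to review rather than guessed.

```python
import re
from typing import Optional, Tuple

# Hypothetical lookup tables; real ones would cover many more variants.
COUNTRY_TO_ISO = {
    "uk": "GB", "united kingdom": "GB", "great britain": "GB",
    "usa": "US", "united states": "US",
}
SECTOR_ALIASES = {
    "fintech": "Financial Technology",
    "financial technology": "Financial Technology",
}
# Checked in order, so more senior keywords win.
SENIORITY_KEYWORDS = [("chief", "C-level"), ("vp", "VP"),
                      ("vice president", "VP"), ("head", "Head"),
                      ("director", "Director"), ("manager", "Manager")]
FUNCTION_KEYWORDS = [("marketing", "Marketing"), ("sales", "Sales"),
                     ("engineering", "Engineering")]


def _first_match(text: str, table) -> str:
    for keyword, label in table:
        if re.search(rf"\b{keyword}\b", text):  # whole-word match only
            return label
    return "Unknown"


def normalise_title(title: str) -> Tuple[str, str]:
    """Reduce a free-text job title to (seniority level, function)."""
    text = title.lower()
    return _first_match(text, SENIORITY_KEYWORDS), _first_match(text, FUNCTION_KEYWORDS)


def normalise_country(value: str) -> Optional[str]:
    """Return an ISO 3166-1 alpha-2 code, or None to flag for review."""
    return COUNTRY_TO_ISO.get(value.strip().lower())


def normalise_sector(value: str) -> Optional[str]:
    return SECTOR_ALIASES.get(value.strip().lower())


print(normalise_title("Marketing Director"))     # ('Director', 'Marketing')
print(normalise_title("Director of Marketing"))  # ('Director', 'Marketing')
print({normalise_country(c) for c in ["UK", "United Kingdom", "Great Britain"]})  # {'GB'}
```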

Handling duplicates, bots, and noisy enrichment

Run regular audits to:

Merge duplicate contacts and accounts

Suppress known bot behaviours or spam

Resolve conflicts when multiple enrichment sources disagree

The goal is to reduce signal noise so that every alert or trigger is based on a clean, accurate record.
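For the first and third audit items, a merge routine might group records by normalised email and resolve conflicting enrichment values by source priority, as in this Python sketch. The source names and their trust order are hypothetical. Many teams prefer the most recently verified value instead, which would only change the sort key. Bot suppression is not covered here.

```python
from typing import Dict, List

# Hypothetical trust order: earlier sources win when values conflict.
SOURCE_PRIORITY = ["crm_manual", "vendor_a", "vendor_b"]


def merge_duplicates(contacts: List[Dict]) -> List[Dict]:
    """Group contacts by normalised email and merge each group into one record."""
    groups: Dict[str, List[Dict]] = {}
    for contact in contacts:
        groups.setdefault(contact["email"].strip().lower(), []).append(contact)

    merged = []
    for email, group in groups.items():
        record = {"email": email}
        # Visit records from most to least trusted source.
        for contact in sorted(group, key=lambda c: SOURCE_PRIORITY.index(c["source"])):
            for key, value in contact.items():
                if key in ("email", "source") or value in (None, "") or key in record:
                    continue  # skip identifiers, blanks, and already-set fields
                record[key] = value
        merged.append(record)
    return merged


contacts = [
    {"email": "Jane@Acme.com", "source": "vendor_b", "industry": "Software", "company_size": 200},
    {"email": "jane@acme.com", "source": "vendor_a", "industry": "SaaS", "company_size": None},
    {"email": "bob@other.io", "source": "crm_manual", "industry": "Retail"},
]
for row in merge_duplicates(contacts):
    print(row)
```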

How to Know If You’re Ready to Scale Signal-Based Workflows

Test signals with dummy workflows

Before pushing signals to production, create test workflows with dummy records. Run through the sequence:

Trigger a signal (e.g. pricing page visit)

Ensure CRM updates with timestamp and source

Check if routing or scoring fires as expected

Confirm sales receives a clear explanation of why

Test different segments (SMB vs enterprise) to ensure the logic holds across the board. If gaps emerge, you may be layering on tools too soon; take time to shore up the foundation before adding more to the stack.
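The four-step dry run can be automated so it reruns whenever a workflow changes. In this Python sketch, `process_signal` is a hypothetical stand-in for your real workflow. In practice you would trigger the live automation against sandbox records and read the CRM record back. The employee threshold for enterprise routing and the queue names are also assumptions.

```python
from datetime import datetime, timezone


def route(record: dict) -> str:
    # Hypothetical rule: 1,000+ employees goes to account executives.
    return "enterprise-ae" if record["employees"] >= 1000 else "smb-sdr"


def process_signal(record: dict, signal: str, source: str, when: datetime) -> dict:
    """Stand-in for the real workflow: update fields, score, route, alert."""
    record = dict(record)  # work on a copy of the dummy record
    record.update(last_signal=signal, last_signal_source=source, last_signal_at=when)
    record["score"] = record.get("score", 0) + (10 if signal == "pricing_page_viewed" else 0)
    record["owner_queue"] = route(record)
    record["alert"] = f"{record['email']} triggered {signal} via {source} on {when:%Y-%m-%d}"
    return record


def run_dummy_tests() -> str:
    when = datetime(2024, 11, 15, 9, 30, tzinfo=timezone.utc)
    dummies = {
        "smb": ({"email": "test+smb@example.com", "employees": 40}, "smb-sdr"),
        "enterprise": ({"email": "test+ent@example.com", "employees": 5000}, "enterprise-ae"),
    }
    for segment, (record, expected_queue) in dummies.items():
        out = process_signal(record, "pricing_page_viewed", "website", when)  # 1. trigger
        assert out["last_signal_at"] == when, segment           # 2. timestamp written
        assert out["last_signal_source"] == "website", segment  # 2. source written
        assert out["score"] == 10, segment                      # 3. scoring fired
        assert out["owner_queue"] == expected_queue, segment    # 3. routing fired
        assert "pricing_page_viewed" in out["alert"], segment   # 4. sales sees why
    return "all dummy workflows passed"


print(run_dummy_tests())
```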

CRM checklist for signal-readiness

Are all leads matched to accounts?

Do fields have validation and naming conventions?

Are duplicate detection and merge rules in place?

Are signal fields visible and understood by sales?

This list helps align marketing ops, RevOps, and CRM admins before launch.

Metrics that show your data model is stable

Look for:

High match rates between leads and accounts

Low enrichment error rates (<5%)

Consistent signal-to-opportunity conversion across segments

Low false positive rate on hot lead alerts

If these metrics hold over time, you’re in a good place to scale with a stack that respects the data standards you have set.
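A lightweight way to track these numbers is to compute them from regular CRM exports. In this Python sketch the input field names are hypothetical. The false positive rate is approximated as the share of hot-lead alerts that sales rejected. Only the <5% enrichment error target comes from the list above. What counts as a “high” match rate or “consistent” conversion is left for your team to set.

```python
from typing import Dict, List


def stability_metrics(leads: List[Dict], alerts: List[Dict]) -> Dict:
    """Compute the stability metrics listed above from simple exports.

    leads:  dicts with 'account_id' (None if unmatched) and 'enrichment_error'.
    alerts: hot-lead alerts with 'segment', 'became_opportunity', 'sales_rejected'.
    """
    match_rate = sum(lead["account_id"] is not None for lead in leads) / len(leads)
    enrichment_error_rate = sum(lead["enrichment_error"] for lead in leads) / len(leads)

    by_segment: Dict[str, List[bool]] = {}
    for alert in alerts:
        by_segment.setdefault(alert["segment"], []).append(alert["became_opportunity"])
    conversion = {seg: sum(v) / len(v) for seg, v in by_segment.items()}

    # Proxy: an alert counts as a false positive if sales rejected it.
    false_positive_rate = sum(a["sales_rejected"] for a in alerts) / len(alerts)

    return {
        "match_rate": match_rate,
        "enrichment_error_rate": enrichment_error_rate,
        "enrichment_ok": enrichment_error_rate < 0.05,  # the <5% target above
        "conversion_by_segment": conversion,
        "false_positive_rate": false_positive_rate,
    }


leads = ([{"account_id": "a1", "enrichment_error": False}] * 19
         + [{"account_id": None, "enrichment_error": True}])
alerts = [
    {"segment": "smb", "became_opportunity": True, "sales_rejected": False},
    {"segment": "smb", "became_opportunity": False, "sales_rejected": True},
    {"segment": "enterprise", "became_opportunity": True, "sales_rejected": False},
    {"segment": "enterprise", "became_opportunity": False, "sales_rejected": False},
]
print(stability_metrics(leads, alerts))
```

Tracking the output week by week matters more than any single snapshot. A stable data model shows these values holding steady over time.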

Build the Foundation Before the Funnel

Signal-based marketing is only powerful if the data supporting it is accurate, complete, and structured. Without reliable tracking, clean identity fields, and a CRM that can digest it all, even the best automation strategies will fall flat. Build the stack deliberately, from UTM hygiene to identity resolution, so you choose tools that fit your data model rather than fight it.

Not sure if your data stack is ready for signal-based marketing? Let’s take a look together and build the foundation right.

FAQs

What is the minimum data required for signal-based marketing to work?

At a minimum, you need clean event tracking (form fills, key page visits), identity resolution fields (email, domain, IP), and consistent lead-to-account matching with enrichment data like industry, job title, and company size.

How can I tell if my signals are misfiring due to poor tracking?

Look for symptoms like irrelevant alerts, duplicate records, low trust from sales, and confusing lead scoring. These are signs your tracking, UTM structure, or CRM fields are not aligned.

What tools help unify event tracking and identity resolution?

Platforms like Segment, RudderStack, and Snowplow support event tagging. For identity resolution, tools like Clearbit and 6sense offer frameworks to connect anonymous and known visitor data.

How do I test if my CRM is ready for signal automation?

Run dummy workflows to validate signal ingestion. Check if events update CRM fields, score leads appropriately, and notify sales with context. Use a readiness checklist to ensure fields, matching, and de-duplication rules are in place.

Why do signal-based automations often fail after implementation?

Many automations fail not because the strategy is wrong, but because the underlying data model is weak. Signals depend on consistent tracking, identity mapping, and CRM alignment. Fix the foundations before scaling workflows.